Exploring AI Integrations with Adobe Photoshop, InDesign and Premiere Pro

As I expect most people in the tech industry have been doing, I’ve been spending a lot of time lately thinking about AI and its impact. As I work with the community for Adobe, I’ve specifically been thinking about how it will affect the creative industry, and its implications for Adobe and Adobe’s creative tools.

Adobe makes industry-leading creative tools such as Photoshop, Illustrator and Premiere Pro, and I have been curious about how, or if, these tools fit into an AI-first world. While I definitely have some thoughts on that (I’ll save them for another post), I wanted to start with the basics and explore whether and how Adobe tools could be integrated with AI tools. Questions like:

- Can they be integrated with and controlled by AI?

- What types of roles and tasks would it be useful for?

- Could AI be used as a glorified scripting language?

- Could it be used to do the actual creation (similar to generative AI)?

- What are the barriers to adoption, given current technical constraints?

There has been a lot of buzz around MCP servers over the past couple of months, with integrations for other creative tools and frameworks like Blender and Unity showing that AI can be used to drive these types of creative workflows. I started to look at whether it would be possible to hook up Adobe apps such as Photoshop in a similar way, and it turns out that yes, you can! Even better, everything needed is publicly available.

Adobe Creative App MCP Server

adb-mcp

Introducing adb-mcp, an open source project that provides MCP servers to enable AI clients and agents to control Adobe Photoshop, Adobe Premiere Pro and Adobe InDesign.

https://github.com/mikechambers/adb-mcp

Currently, there are plugins for three Adobe apps:

- Adobe Photoshop: Has the most functionality exposed

- Adobe Premiere Pro: UXP support is in beta, so only basic functionality is exposed

- Adobe InDesign: Has extensive UXP support, but I have only implemented basic document creation so far

Note: This is a proof of concept project, and is neither supported nor endorsed by Adobe.

This has been tested using Claude Desktop, as well as custom agents, but in general it should work with any client or code that supports MCP. In addition, it should be easy to add support for any Adobe app that supports the UXP plugin API (it may also be technically possible to add support for other apps, but I haven’t looked into it).

How does it work?

It works by providing:

- A Python-based MCP server that exposes functionality within the Adobe apps (such as Photoshop) to the AI

- A Node-based command proxy server that sits between the MCP server and the Adobe app plugins

- A UXP-based Adobe app plugin (Photoshop, Premiere & InDesign) that listens for and executes commands from the AI

Here is the communication flow and architecture:

AI <-> MCP Server <-> Command Proxy Server <-> Photoshop / Premiere UXP Plugin <-> Photoshop / Premiere

The proxy server is required because UXP-based JavaScript plugins in Adobe apps can’t listen for incoming socket connections. They can only initiate outgoing ones as a client.
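To make the relay idea concrete, here is a minimal Python sketch of a command proxy that both sides dial out to. It is not the adb-mcp proxy (which is Node-based); plain TCP with newline-delimited JSON and the message fields (`type`, `application`, `id`, `action`) are assumptions chosen for illustration. The plugin registers with the proxy, the MCP server sends a command addressed to an app, and the proxy forwards the plugin's response back by request id.

```python
import asyncio
import json


async def send(writer: asyncio.StreamWriter, msg: dict) -> None:
    """Write one newline-delimited JSON message (hypothetical wire format)."""
    writer.write((json.dumps(msg) + "\n").encode())
    await writer.drain()


class CommandProxy:
    """Relay that both the MCP server and the UXP plugins connect *out* to."""

    def __init__(self) -> None:
        self.plugins: dict[str, asyncio.StreamWriter] = {}  # app name -> plugin connection
        self.pending: dict[str, asyncio.StreamWriter] = {}  # request id -> MCP-side connection

    async def handle(self, reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
        async for line in reader:  # one JSON message per line, until EOF
            msg = json.loads(line)
            if msg["type"] == "register":  # a plugin announcing which app it runs in
                self.plugins[msg["application"]] = writer
                await send(writer, {"type": "registered"})
            elif msg["type"] == "command":  # from the MCP server, routed by app name
                plugin = self.plugins.get(msg["application"])
                if plugin is None:
                    await send(writer, {"type": "response", "id": msg["id"],
                                        "status": "FAILURE", "message": "app not connected"})
                else:
                    self.pending[msg["id"]] = writer
                    await send(plugin, msg)
            elif msg["type"] == "response":  # from a plugin, routed back by request id
                requester = self.pending.pop(msg["id"], None)
                if requester is not None:
                    await send(requester, msg)
        writer.close()


async def demo() -> dict:
    proxy = CommandProxy()
    server = await asyncio.start_server(proxy.handle, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]

    # Simulated UXP plugin: it can only open outgoing connections, so it dials the proxy.
    p_reader, p_writer = await asyncio.open_connection("127.0.0.1", port)
    await send(p_writer, {"type": "register", "application": "photoshop"})
    await p_reader.readline()  # wait for the "registered" acknowledgement

    async def run_plugin() -> None:
        cmd = json.loads(await p_reader.readline())
        await send(p_writer, {"type": "response", "id": cmd["id"],
                              "status": "SUCCESS", "action": cmd["action"]})

    plugin_task = asyncio.create_task(run_plugin())

    # Simulated MCP server: also just a client of the proxy.
    m_reader, m_writer = await asyncio.open_connection("127.0.0.1", port)
    await send(m_writer, {"type": "command", "id": "1",
                          "application": "photoshop", "action": "createDocument"})
    reply = json.loads(await m_reader.readline())
    await plugin_task

    for w in (p_writer, m_writer):
        w.close()
        await w.wait_closed()
    server.close()
    await server.wait_closed()
    return reply


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Routing responses by request id rather than by connection lets several commands be in flight at once; a real implementation would also need timeouts and handling for plugins that disconnect.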

Once it is set up, using it is as simple as telling the AI to do something in Photoshop.

Examples

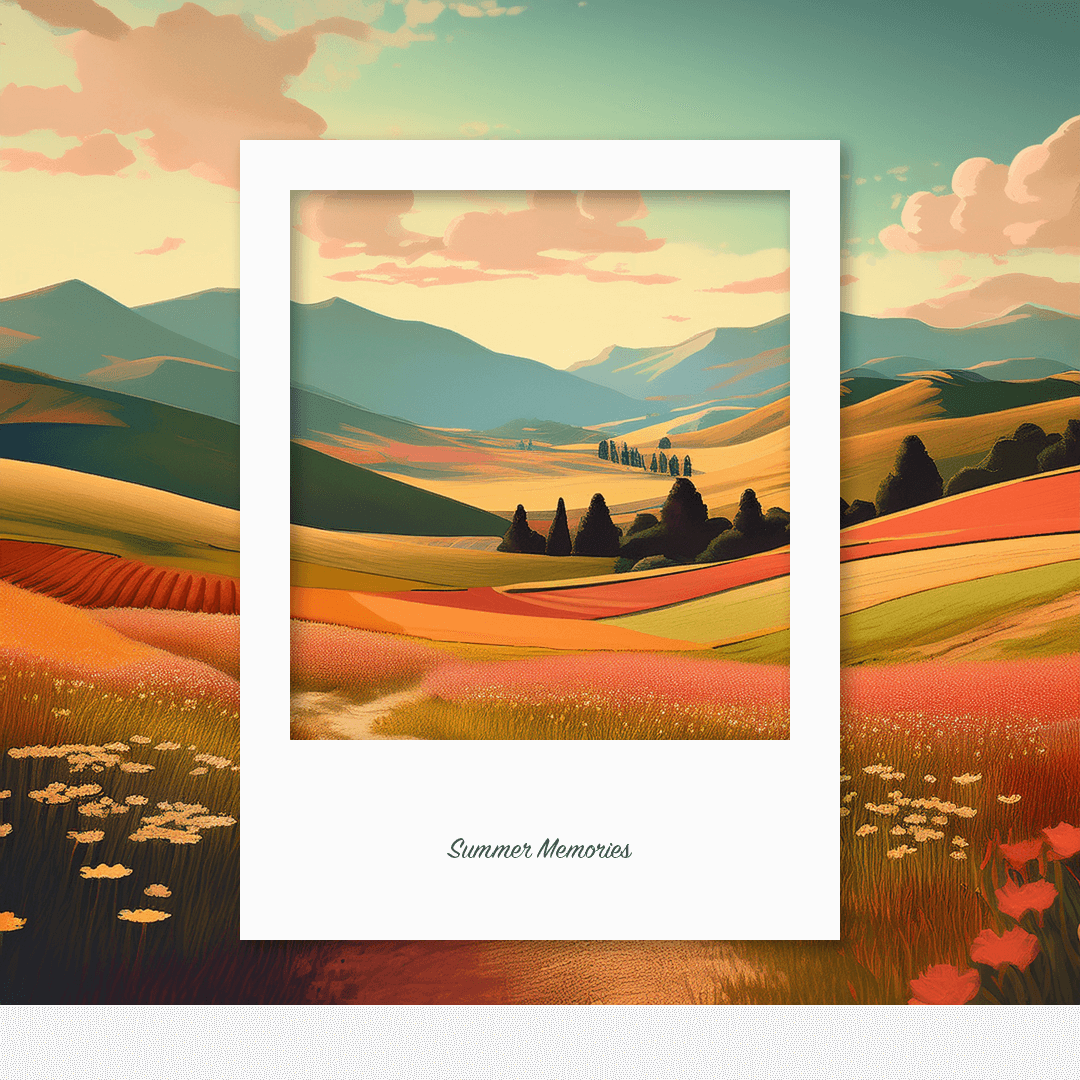

PROMPT: Create an instagram post that looks like a polaroid photo with a redwood forest in an illustrative style. you should use clipping masks to mask the phone, and make sure to check your work to ensure everything is setup correctly. if you use any text make sure the size is not too small. when you are done, write a step by step tutorial for me so i can recreate it in photoshop myself.

Here is a list of some examples / experiments:

- AI Agent for Photoshop / Rename Layers

- AI Agent for Adobe Premiere / Photoshop: Create Photo Slideshow

- AI Agent for Adobe Photoshop (MCP) : Create Instagram post

- AI Agent for Adobe Premiere : Create Music Video From Random Clips

- AI Agent for Adobe Photoshop (MCP) : Clean up layers

- AI Agent for Adobe Photoshop (MCP) : User Driven

- AI Agent for Adobe Photoshop (MCP) : Create a custom tutorial

- AI Agent for Photoshop / Describe Content in Photoshop File

- AI Agent for Premiere Pro / Create Sequences Based on Creation Date

- AI Agent for Photoshop / Follow a Tutorial

Most of these can be found in this YouTube playlist:

https://youtu.be/5p7oCdTVssk?si=SyI4kmmgJdT2P2dp

PROMPT: I just watched a youtube tutorial. I am going to give you the transcript. can your recreate what it is teaching? (use 300 pt for any text).

Areas for Improvement

This is a proof of concept project, and while it can actually be useful, there is a TON of room for improvement.

Not Production Ready

While the actual functionality and control work surprisingly well, it can be difficult to set up, and requires some knowledge of working with the command line.

If Adobe were ever to release something like this, they would need to solve the problem of bridging between the MCP server and the UXP plugins, which they could do in a couple of ways, including:

- Including their own proxy server as part of Creative Cloud desktop

- Building MCP support directly into the apps or Creative Cloud Desktop

- Adding support for UXP plugins to listen on a socket connection (right now they can only connect to a socket, not listen on one)

Passing Images

Currently, images passed between the app, the MCP server and the AI are written to the filesystem. At least for Photoshop, it should be possible to pass everything directly through the socket.
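One way to pass images directly through the socket is to frame each message as a small JSON header followed by the raw image bytes, rather than base64-encoding them into JSON (which adds roughly a third to the size). The sketch below assumes the socket can carry binary data and uses a made-up layout (a 4-byte big-endian length prefix, then a JSON header with a `payloadLength` field); it is not what adb-mcp does today.

```python
import json
import struct


def pack_frame(header: dict, payload: bytes = b"") -> bytes:
    """Frame = 4-byte big-endian header length + UTF-8 JSON header + raw payload bytes."""
    head = json.dumps({**header, "payloadLength": len(payload)}).encode("utf-8")
    return struct.pack(">I", len(head)) + head + payload


def unpack_frame(frame: bytes) -> tuple[dict, bytes]:
    """Split a frame produced by pack_frame back into (header, payload)."""
    (head_len,) = struct.unpack_from(">I", frame, 0)
    header = json.loads(frame[4:4 + head_len])
    start = 4 + head_len
    return header, frame[start:start + header["payloadLength"]]


# Example: an exported PNG (stand-in bytes here) travels with its metadata, no temp file.
png_bytes = b"\x89PNG\r\n\x1a\n" + bytes(16)
frame = pack_frame({"type": "response", "id": "42", "mimeType": "image/png"}, png_bytes)
header, data = unpack_frame(frame)
```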

AI Vision

As currently implemented, the AI client can’t see the app’s output unless the user explicitly copies and pastes it, or exports and loads a file. It should be possible to send the output via the MCP APIs / protocol, which would make it much easier for the AI to check and monitor its work.
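MCP tool results can carry image content (base64 data plus a MIME type) alongside text, which is one route for getting app output to the AI without a manual copy / paste. A minimal sketch of building such a result, assuming the image bytes have already been exported from the app by some hypothetical export command:

```python
import base64


def image_tool_result(image_bytes: bytes, mime_type: str = "image/png", note: str = "") -> dict:
    """Build an MCP tool result whose content includes the rendered image.

    MCP image content is base64-encoded data plus a MIME type, so a client that
    supports it can pass the image to the model without a file round trip.
    """
    content = []
    if note:
        content.append({"type": "text", "text": note})
    content.append({
        "type": "image",
        "data": base64.b64encode(image_bytes).decode("ascii"),
        "mimeType": mime_type,
    })
    return {"content": content, "isError": False}
```

Whether the image actually reaches the model still depends on the client supporting image content in tool results.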

More APIs / Functionality

The MCP server works by explicitly exposing functionality API by API. While this is a bit tedious to code and leads to very large MCP servers, it gives the AI a much better understanding of what functionality is available and how to use it.
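A small sketch of what "API by API" looks like: each function becomes a named tool whose docstring and typed parameters are what the AI reads. The `tool` decorator and `TOOLS` registry are hypothetical stand-ins, similar in spirit to decorator-based MCP SDKs, and the action names in the returned commands are made up.

```python
import inspect
from typing import Callable

TOOLS: dict[str, dict] = {}  # hypothetical registry the server would advertise to the AI


def tool(fn: Callable) -> Callable:
    """Register fn as an AI-visible tool, described by its signature and docstring."""
    params = {
        name: getattr(p.annotation, "__name__", str(p.annotation))
        for name, p in inspect.signature(fn).parameters.items()
    }
    TOOLS[fn.__name__] = {"description": inspect.getdoc(fn) or "", "parameters": params}
    return fn


@tool
def create_document(name: str, width: int, height: int, resolution: int = 72) -> dict:
    """Create a new Photoshop document. Width and height are in pixels."""
    return {"action": "createDocument",
            "options": {"name": name, "width": width, "height": height, "resolution": resolution}}


@tool
def set_layer_visibility(layer_id: int, visible: bool) -> dict:
    """Show or hide a single layer by its id."""
    return {"action": "setLayerVisibility", "options": {"layerId": layer_id, "visible": visible}}
```

Every tool adds another description to the AI's context, which is the trade-off behind the large MCP servers mentioned above.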

Right now, Photoshop has the most functionality exposed, although there is room to expand API support much further across all three apps.

However, as more APIs are exposed, it may become necessary (or just make sense) to split each app’s MCP functionality into multiple MCP servers.

Tips & Tricks

Complement capabilities with other MCPs

adb-mcp works really well, but you can really extend its usefulness when you couple it with other MCPs.

A couple that I have found particularly useful (some of which I created specifically for these explorations):

- media-utils-mcp : MCP Server that I created that provides information / metadata on image and video files (uses FFmpeg).

- filesystem MCP Server : MCP Server from Anthropic that provides access to the filesystem.

- memory MCP Server : MCP Server from Anthropic that provides a knowledge graph-based persistent memory system.

- image-vision-mcp : Simple MCP Server that I created that uses a local LLM to provide descriptions of images.

Preload App Specific MCP Instructions

Each MCP server provides a resource that will insert instructions for using the MCP into the AI context. This can dramatically reduce mistakes and help the AI understand how to use the app.

Be Specific with Prompts

This is more general to working with LLMs, but the more specific you are in what you want the AI to do, the better results you will get.

In particular, the AI will often get “creative” and continue to add things once it has completed the task asked of it. If you don’t want it to do that, be clear about it.

Batch Calls

This mostly applies if you are adding new APIs yourself: when doing so, add support for batching calls instead of making one call at a time. Given the back and forth between the server and the AI needed for each call, this can dramatically speed things up.

For example, instead of:

```python
def add_layer(layer_name: str): ...
```

do:

```python
def add_layers(layer_names: list[str]): ...
```
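To show why batching helps, here is a sketch with a hypothetical `FakeConnection` that counts round trips; the `addLayer` / `addLayers` action names are invented. In practice each tool call also costs an AI turn, so the saving is larger than the socket round trip alone.

```python
class FakeConnection:
    """Stand-in for the MCP server's link to the app; counts request/response cycles."""

    def __init__(self) -> None:
        self.round_trips = 0

    def send_command(self, action: str, options: dict) -> dict:
        self.round_trips += 1  # each call is one full request/response cycle
        return {"status": "SUCCESS", "action": action, "options": options}


conn = FakeConnection()


def add_layer(layer_name: str) -> dict:
    """One layer per call: N layers cost N round trips."""
    return conn.send_command("addLayer", {"name": layer_name})


def add_layers(layer_names: list[str]) -> dict:
    """All layers in one call: N layers cost a single round trip."""
    return conn.send_command("addLayers", {"names": layer_names})


names = ["Background", "Photo", "Tape", "Caption"]
for name in names:
    add_layer(name)   # 4 round trips
add_layers(names)     # 1 round trip
```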

Thoughts and Observations

This is actually useful!

First and foremost, if you can get through the less-than-user-friendly setup and install, this can be really useful.

From a production standpoint, you can use it as a scripting tool on steroids that is easy to use, and often much more powerful.

For example, one of the running jokes in the Photoshop community is that no one names their layers! It can take you out of your flow, and even when you do name them, it can be difficult to keep a consistent naming scheme. This is a perfect task for AI.

Here is an example using the MCP server coupled with a simple image vision MCP I wrote, which takes a real-world Photoshop file created by Paul Trani and renames all of its layers based on their content:

https://www.youtube.com/watch?v=VhVrEb1ZmoA&list=PLrZcuHfRluqt5JQiKzMWefUb0Xumb7MkI&index=8

This is an example where the MCP server APIs could be better optimized for this type of task.
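One way the APIs could be tuned for this: let the vision model describe each layer, normalize the descriptions into a consistent naming scheme in code, and send every rename in a single batched call. The naming rules (Title Case, at most four words, numbered duplicates) and the `layerId` / `newName` fields are assumptions for illustration.

```python
import re
from collections import Counter

STOP_WORDS = {"a", "an", "the", "of", "with", "and", "on", "in"}


def layer_name_from_description(description: str, max_words: int = 4) -> str:
    """Turn a free-text description (e.g. from a vision model) into a short Title Case name."""
    words = re.findall(r"[A-Za-z0-9]+", description)
    kept = [w for w in words if w.lower() not in STOP_WORDS][:max_words]
    return " ".join(w.capitalize() for w in kept) or "Layer"


def plan_renames(descriptions: dict[int, str]) -> list[dict]:
    """Build one batched rename payload, numbering duplicate names so each stays unique."""
    names = {lid: layer_name_from_description(d) for lid, d in descriptions.items()}
    totals = Counter(names.values())
    seen: Counter = Counter()
    plan = []
    for lid, name in names.items():
        if totals[name] > 1:
            seen[name] += 1
            name = f"{name} {seen[name]}"
        plan.append({"layerId": lid, "newName": name})
    return plan
```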

Other types of tedious tasks could include things like:

- Rename files

- Resize files

- Add watermarks to files

- Apply effects across files

- etc…
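For a task like resizing, the arithmetic is better done in code than by the model, leaving the AI to decide the rules and hand off one batched plan. A sketch, assuming a hypothetical plan format and a "fit the longest side, never upscale" rule:

```python
def fit_within(width: int, height: int, max_side: int) -> tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side, keeping aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # never upscale
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


def plan_resizes(files: dict[str, tuple[int, int]], max_side: int) -> list[dict]:
    """One batched list of resize operations, covering only the files that need it."""
    plan = []
    for path, (w, h) in files.items():
        new_w, new_h = fit_within(w, h, max_side)
        if (new_w, new_h) != (w, h):
            plan.append({"file": path, "width": new_w, "height": new_h})
    return plan
```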

Can handle real tasks

One thing I was really interested in was whether you could use the AI to treat Photoshop as an image / content generation engine: instead of telling it what to do step by step, give it a task and let it figure out the steps.

The advantage of this approach over other generative image AIs (such as Adobe Firefly) is that the end result is a multilayered PSD file that you can easily edit, modify or reuse.

You can see an example of this approach in this video, where I ask the AI to create a Photoshop file for an Instagram post that looks like a Polaroid picture.

This is a task that requires multiple steps, and an understanding of the approach to take to create it as well as how to use Photoshop and its tools to accomplish it.

While it doesn’t always get everything right the first time, it does a surprisingly good job, and often makes very usable content. What I have found is that the request above is about the maximum level of complexity it can currently handle by default. I think you could extend this by taking a more modular approach, and especially by coupling it with a memory MCP (more on that below).

The AI sometimes seems to be “creative”

This is not the place to get into a discussion about what it means to be truly creative, but there have been a number of instances where the AI would surprise me with its approach, often going beyond my specific ask and adding little flourishes.

For example, early in my experimentation, I asked it to do a task where it was generating images within a design, and it decided to add a vignette to give the design a more authentic feel. Not only had I not asked it to do that, but the MCP server also didn’t have functionality for creating vignettes. It was able to figure out how to use existing tools to replicate one (making a selection, inverting it, filling it and then blurring).
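The vignette the AI improvised can be written down as a short sequence of primitive commands, which also makes it a natural candidate for a single batched call. The action names and options below are hypothetical, not adb-mcp commands:

```python
def vignette_steps(opacity: int = 60, blur_radius: int = 150) -> list[dict]:
    """Hypothetical command list: select, invert, fill, then blur, on its own layer."""
    return [
        {"action": "addLayer", "options": {"name": "Vignette"}},
        # Elliptical selection covering most of the canvas (inset as a fraction of each side).
        {"action": "selectEllipse", "options": {"inset": 0.1}},
        # Invert so only the edges and corners are selected.
        {"action": "invertSelection", "options": {}},
        {"action": "fillSelection", "options": {"color": "#000000", "opacity": opacity}},
        {"action": "deselect", "options": {}},
        # Blur the filled edge so it fades smoothly into the image.
        {"action": "applyGaussianBlur", "options": {"radius": blur_radius}},
    ]


plan = vignette_steps()
```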

Other examples include small flourishes, like tilting the photo at an angle, or adding a piece of tape to make it look like it is taped to a wall.

Now, I don’t think this would fall under truly “creative”, but it demonstrates behavior that feels creative, both in what it’s making and in how it approaches solving the task.

Context is super important

This is probably pretty obvious, but the more context / info the AI has, the better it can perform. Many times I would ask it to perform a task, it would make a mistake, and I would correct it. If you then ask it to perform the task again with the previous context, it gets much further along.

Again, this probably falls under “duh”, but it opens up the possibility of really expanding capabilities both through larger context windows, more advanced prompts, custom trained LLMs, and using memory MCPs.

Memory MCPs really extend functionality

Related to the point above, using memory MCP servers, you can train the AI to learn more complex, multi-step tasks. In my testing, it could take about 30 to 45 minutes going back and forth with the AI, correcting mistakes, and directing until it could learn a task around the complexity of creating an Instagram post that looks like a Polaroid image.
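Corrections made during a session can be stored as observations on an entity, so a later session can recall them. A sketch of the arguments for a `create_entities` call, assuming the shape used by Anthropic's memory server (entities with `name`, `entityType` and `observations`); the entity type and the example lessons are hypothetical:

```python
def lesson_entities(task: str, lessons: list[str]) -> dict:
    """Arguments for a memory server's create_entities tool: one entity per learned task."""
    return {
        "entities": [
            {
                "name": task,
                "entityType": "photoshop_workflow",  # hypothetical type label
                "observations": list(lessons),       # each correction becomes an observation
            }
        ]
    }


args = lesson_entities(
    "Polaroid-style Instagram post",
    [
        "Use a clipping mask to place the photo inside the frame.",
        "Check the layer structure after each major step.",
        "Avoid small text sizes.",
    ],
)
```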

I found that this could dramatically increase both the complexity of the tasks the AI could accomplish and the overall quality of the output.

While that is a lot of time to train for a single task, it opens up the possibility of automating that training, or even of pre-trained, app-specific LLMs.

Photoshop as context for AI

All of my experiments thus far have focused on using the AI to control the tools to create or help with content. However, I think it’s important to also recognize MCP servers as a source of AI context. Specifically, they could allow direct input from Photoshop or InDesign to provide context for LLMs.

For example, maybe you are asking an AI to write some code, and want to give direction on the UI. The AI could use the MCP server to get the UI mockup directly from Photoshop. This is what Figma is doing with its Dev Mode MCP Server.

You can see a simple example of that in this video showing AI accessing Photoshop and describing the content.

While binary formats pose more issues than text-based ones, the first step is to make it as easy as possible for the AI to get the input. It may not matter that the source format is binary if it can be converted into a format or description that the AI can use.
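As an example of converting a binary document into something an LLM can use, a layer tree can be flattened into a short text outline. The layer dictionary shape (`kind`, `name`, `bounds` as left, top, right, bottom, `text`, `visible`, `children`) is an assumption, not Photoshop's actual API:

```python
def describe_layers(layers: list[dict], depth: int = 0) -> str:
    """Flatten a (hypothetical) layer tree into an indented outline an LLM can read."""
    lines = []
    for layer in layers:
        bits = [layer["kind"], repr(layer["name"])]
        if "bounds" in layer:
            left, top, right, bottom = layer["bounds"]
            bits.append(f"{right - left}x{bottom - top}px at ({left},{top})")
        if "text" in layer:
            bits.append(f"text={layer['text']!r}")
        if not layer.get("visible", True):
            bits.append("hidden")
        lines.append("  " * depth + "- " + " ".join(bits))
        if layer.get("children"):
            lines.append(describe_layers(layer["children"], depth + 1))
    return "\n".join(lines)


doc = [{"kind": "group", "name": "Polaroid", "children": [
    {"kind": "pixel", "name": "Photo", "bounds": [100, 100, 980, 980]},
    {"kind": "text", "name": "Caption", "text": "Redwoods", "visible": False},
]}]
outline = describe_layers(doc)
```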

Optimizing app output formats for AI, as opposed to humans or other apps, is an area that Adobe should probably explore further.

As a Key Component for an AI first workflow

Related to the point above, if you see the future as one that starts with AI organizing, coordinating and managing projects (such as in this video where Josh Cleopas uses ChatGPT to manage his design workflow), it’s going to be vital that the actual tools used to create, edit and tweak content fit seamlessly within that workflow.

These MCP servers can start to fill that role for Adobe creative tools. While there may ultimately be better solutions, MCP exists today, opening up space to explore and learn how to integrate creative tools into these AI driven workflows.

There’s a lot more to explore, especially around making this seamless for non-developers, improving feedback loops, and rethinking app roles in an AI-first world. I’ll try to dive deeper into those topics in future posts.

For now, if you’re interested in experimenting, check out the GitHub repo or the YouTube playlist for demos.